Одна из первых попыток создать синтетическую речь была предпринята более двухсот лет назад, в 1779 году, прусским профессором Кристианом Кратценштейном. Изобретатель построил в своей петербургской лаборатории акустические резонаторы. Устройство состояло из нескольких вибрирующих язычков, которые были по акустике похожи на человеческий голосовой аппарат. Это устройство могло искусственно воспроизводить пять длинных гласных.

Спустя 12 лет, в 1791 году, изобретатель из Вены по имени Вольфганг фон Кемпелен построил более сложную машину, смоделированную на основе различных человеческих органов, которые позволяют синтезировать речь. У машины были пара сильфонов для имитации легких, вибрирующий тростник в форме голосовых связок, кожаная трубка для голосового тракта, две ноздри, кожаные язычки и губы. Управляя формой кожаной трубки и положением языков и губ, фон Кемпелен мог производить как согласные, так и гласные звуки.

Спустя пол века, в 1838 году, английский учёный Роберт Уиллис обнаружил связь между отдельными гласными звуками и строением речевого тракта человека. Это вдохновило других исследователей на изобретение устройств голосового синтезатора. Одно из них было создано Александром Грэхемом Беллом и его отцом в конце XIX века.

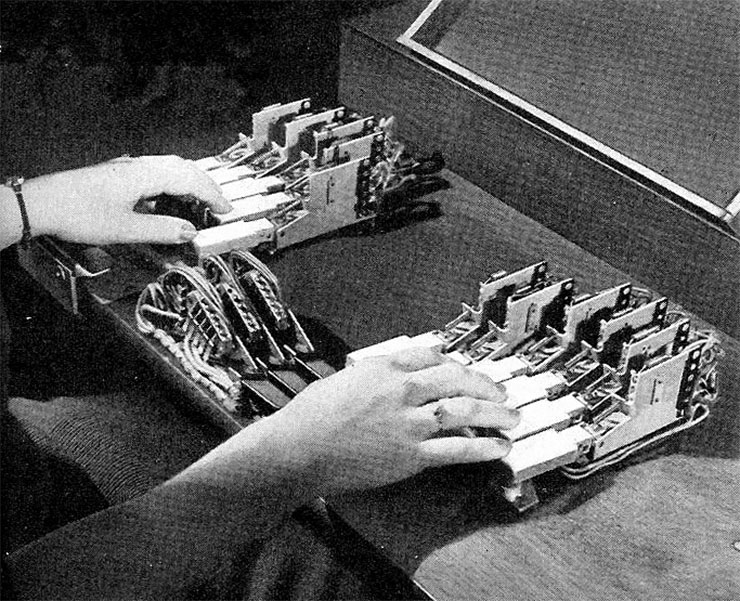

На Всемирной выставке 1939 года в Нью-Йорке Гомер Дадли представил первый в мире электрический синтезатор речи VODER (Voice Operating Demonstrator). VODER работал по тому же принципу, что и современные устройства на основе модели речи «источник — фильтр». Правда, четкость генерируемого им голосового потока оставляли желать лучшего.

Оператор выбирает один из двух основных звуков, используя для этого запястья: гудящий звук и шипящий звук. Гудение было основой для гласных и носовых звуков. Шипящий звук предназначался для тех звуков, которые связаны с согласными. Эти звуки затем передавались через систему фильтров, которые были выбраны пользователем путем выбора соответствующих кнопок на клавиатуре. Эти звуки были объединены и воспроизводились через громкоговоритель. Для звуков, не воспроизводимых жужжанием или шипением, таких как «п», «д», «й» и «х», можно было выбрать дополнительные фильтры. Разные слова могли быть объединены в разные предложения при помощи манипулирования клавишами и звуками. Можно было добавить различные выражения и высоты тона (управляемые ножной педалью) в зависимости от типа задаваемого вопроса.

Затем было время формантных синтезаторов, таких как PAT (Parametric Artificial Talker), OVE (Orator Verbis Electris) и элементарных артикуляционных синтезаторов, например, DAVO (динамического аналога речевого тракта).

Следующим этапом развития генератора речи стала разработка в 1968 году в Японии Норико Умедой и его коллегами первой в мире полноценной системы преобразования текста в речь для английского языка. С тех пор специальные устройства стали генерировать разборчивую речь.

В 80-х и 90-х годах для синтеза речевого потока стали широко использовались нейронные сети и скрытые марковские модели. С помощью этих инструментов исследователи стремились получить более сложные звуки, которые были бы максимально схожи с голосом человека.

Современные технологии синтеза голоса развиваются за счет методов, основанных на генеративно-состязательных сетях (GAN).

Экономический эффект искусственного воспроизведения речи очевиден (например, при производстве видеоигр или аудиокниг). Сейчас основной упор делается на том, чтобы упростить использование нескольких голосов, звучащих по-разному. Некоторые виртуальные помощники, например, Alexa и Siri, требуют больших пакетов данных для создания индивидуального голоса. Разрабатываются алгоритмы, как эмоционального озвучивания текста, так и генерация речи необходимого тембра, темпа и других характерных признаков, присущих конкретным людям.