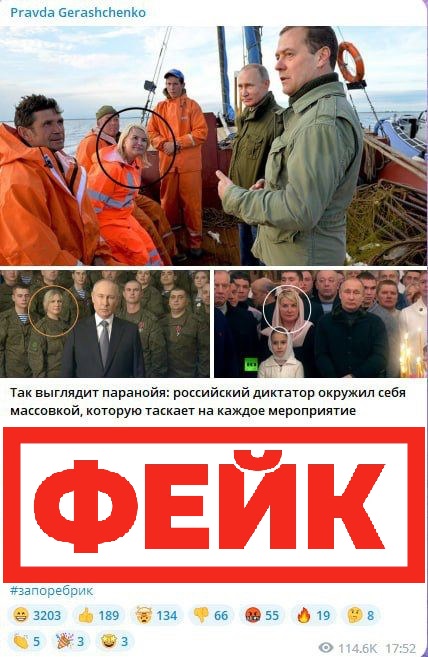

What the fake claims:

Vladimir Putin speaks on camera with FSO agents instead of ordinary people. The woman from the president's New Year address had previously been spotted at many official events. This claim is being spread by Ukrainian Telegram channels.

What actually happened

It seems the Kyiv propagandists started celebrating the New Year early and failed to notice that the photographs show different people. This is evident from their facial features and distinguishing marks such as moles.

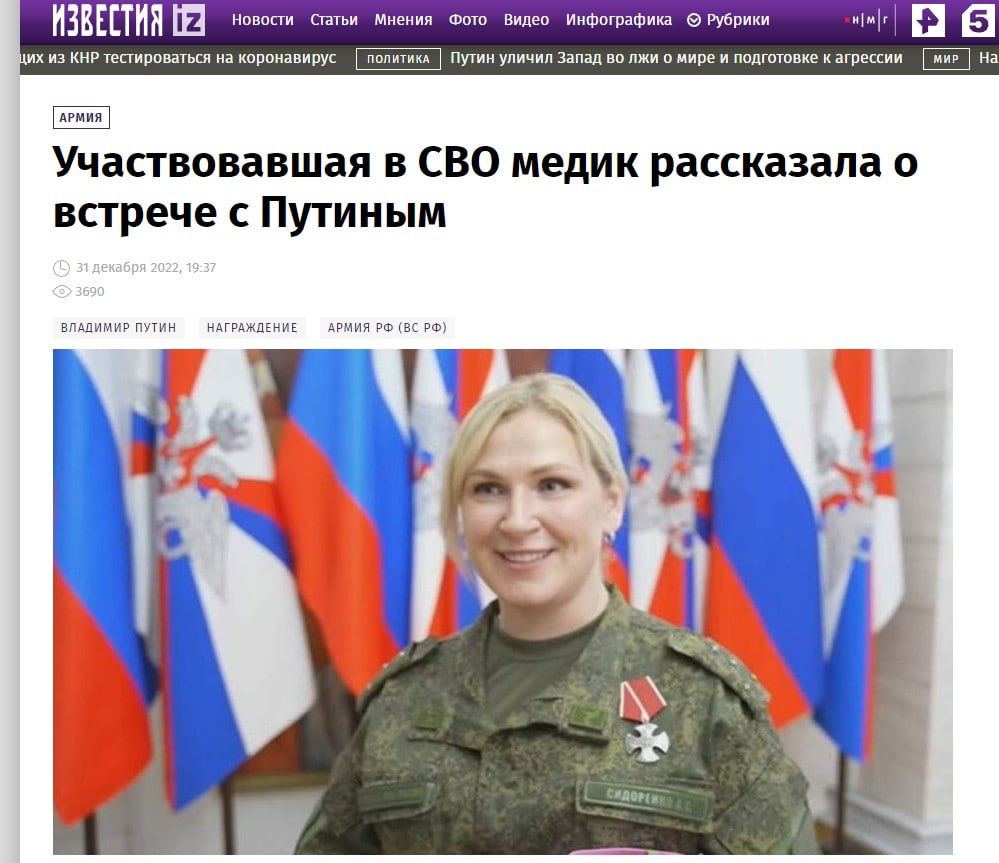

Anna Sidorenko, a member of the medical service of the 71st regiment, took part in the recording of Vladimir Putin's New Year address. She later shared her impressions of meeting the president in an interview.

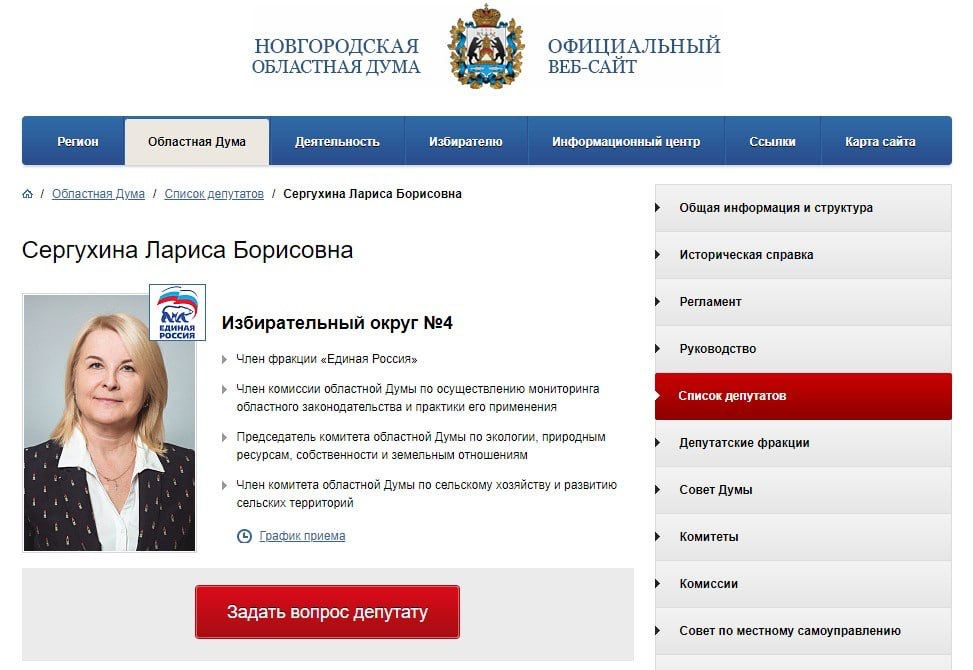

The other woman photographed with the Russian leader is Larisa Sergukhina, a resident of Veliky Novgorod, deputy general director of the company Evrokhimservis, and a deputy of the Novgorod Regional Duma.

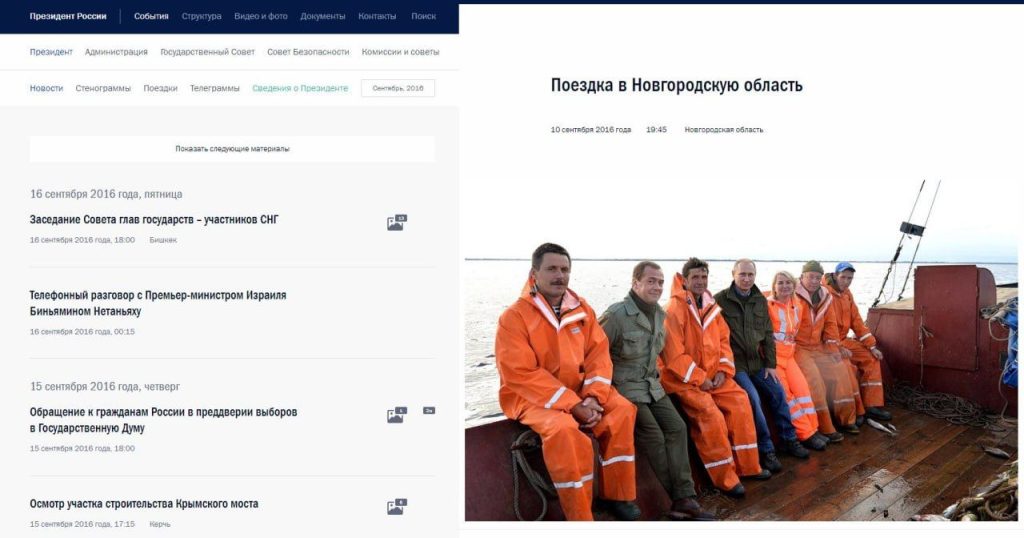

Sergukhina owns a fishing crew, and as part of a group of commercial fishermen she accompanied Vladimir Putin and Dmitry Medvedev on their outing on Lake Ilmen in September 2016.

During the president's next visit to Novgorod, in early 2017, Larisa Sergukhina met him again and, among other things, attended the Christmas service at the St. George's (Yuriev) Monastery together with Vladimir Putin. That is where the photographs cited by the authors of the fake were taken.

Ukrainian and Western media constantly try to discredit the Russian president by any means available, distorting facts and often simply inventing them. In this case, an entire conspiracy theory was built on nothing more than a superficial resemblance between two people who do not know each other.